The Energy Challenge in AI Development

TLDR: Looking at these huge numbers, which do not even include inference, every company needs to evaluate how it uses LLMs going forward.

I’ve been researching large language models and their power consumption because I want to write a couple of articles on how we can adjust and improve our thinking around sustainability and AI. Yesterday, Mark Zuckerberg mentioned in a post on Threads that Meta will invest in 350 000 Nvidia H100 GPUs. According to Mark, once its other GPUs are included, Meta will reach compute equivalent to around 600 000 H100s.

Based on the 350 000 H100s alone, that is an investment of around 9 billion USD at the very least.

Now, what interests me is how much power these GPUs will consume.

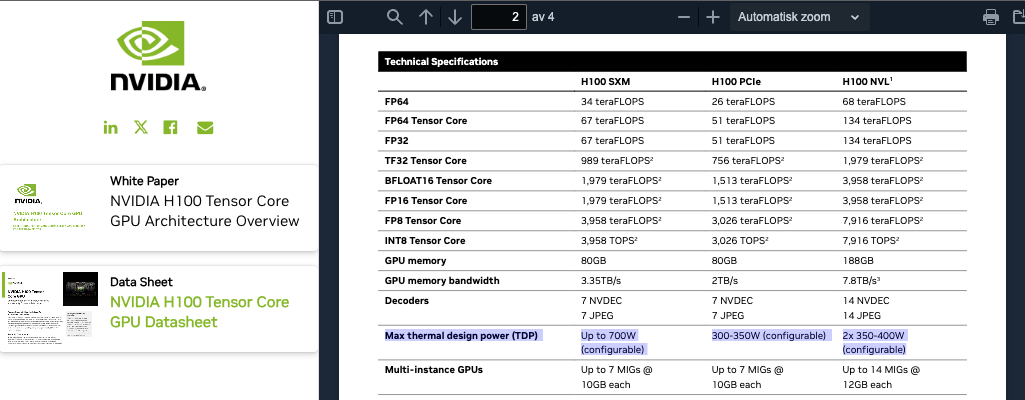

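Looking at the Nvidia datasheet, the H100 has a thermal design power (TDP) of up to 700W in the SXM form factor and 350-400W in the NVL (PCIe) variant. In the estimate below I use 400W per GPU, which makes it a conservative one.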

GPT-4, reportedly trained on 25 000 A100s with a similar TDP, consumed an estimated 24 000 000 kWh over 100 days of training. And this figure does not even include the surrounding infrastructure, such as cooling, CPUs, etc.
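The 24 000 000 kWh figure appears to follow directly from assuming that every GPU draws its full TDP around the clock for the whole 100 days, with no overhead included:

$$
E = N_{\text{GPU}} \times P_{\text{TDP}} \times t = 25\,000 \times 0.4\ \text{kW} \times (100 \times 24)\ \text{h} = 24\,000\,000\ \text{kWh}
$$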

Assuming the same 400W per GPU and the same 100-day period, 600 000 H100 GPUs means 24 times as many GPUs and therefore roughly 24 times the energy: a staggering 576 000 000 kWh, close to 600 000 000 kWh, for a 100-day training period.
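These estimates are simple back-of-the-envelope arithmetic, so here is a minimal Python sketch of it. It assumes every GPU runs at its full TDP for the entire period. The function name `training_energy_kwh` and the `overhead_factor` parameter are hypothetical names of my own; the overhead factor is only a rough stand-in for something like a data centre's PUE (cooling, CPUs, networking) and is left at 1.0 to match the GPU-only numbers. It also shows how the result changes at the 700W maximum the datasheet lists for the H100 SXM.

```python
# Back-of-the-envelope GPU training energy: GPUs x TDP x hours.
# Assumptions (illustrative only): every GPU draws its full TDP for the
# whole period, and overhead (cooling, CPUs, networking) is a simple
# multiplier, where 1.0 means "GPUs only".

HOURS_PER_DAY = 24


def training_energy_kwh(num_gpus: int, tdp_watts: float, days: float,
                        overhead_factor: float = 1.0) -> float:
    """Estimate energy in kWh for num_gpus running at tdp_watts for `days` days."""
    kilowatts = num_gpus * tdp_watts / 1000  # total draw in kW
    hours = days * HOURS_PER_DAY
    return kilowatts * hours * overhead_factor


# GPT-4 baseline as cited in the article: 25 000 A100s at 400 W for 100 days.
gpt4 = training_energy_kwh(25_000, 400, 100)

# Meta's ~600 000 H100 equivalents, at the article's 400 W and at the
# 700 W maximum listed for the H100 SXM form factor.
meta_400w = training_energy_kwh(600_000, 400, 100)
meta_700w = training_energy_kwh(600_000, 700, 100)

for label, kwh in [("GPT-4 baseline", gpt4),
                   ("Meta @ 400 W", meta_400w),
                   ("Meta @ 700 W", meta_700w)]:
    print(f"{label:15} {kwh:>15,.0f} kWh  ({kwh / gpt4:.1f}x baseline)")
```

At 400W the sketch gives 576 000 000 kWh, 24 times the GPT-4 baseline; at 700W it gives 1 008 000 000 kWh, 42 times the baseline, before any cooling or other overhead is added.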

Now, this is a complex situation. Meta's LLaMA models, for example, are more efficient than GPT-4. We also do not know whether Meta will turn off the clusters between workloads (highly unlikely?). And for larger models, the energy spent on inference quickly exceeds even that of the training phase.

My point is: looking at these huge numbers, which do not even include inference, every company needs to evaluate its usage of LLMs and how it uses them. Next week I will publish advice on how to optimize this.

And I know, Mark's statements yesterday are MASSIVE, and there is a lot to unpack here.

Screenshot: Mark Zuckerberg on Threads

“The Carbon Impact of Large Language Models: AI’s Growing Environmental Cost” by Archana Vaidheeswaran:

https://www.linkedin.com/pulse/carbon-impact-large-language-models-ais-growing-cost-vaidheeswaran-fcbhc/

Nvidia H100 datasheet:

https://resources.nvidia.com/en-us-tensor-core/nvidia-tensor-core-gpu-datasheet

Mark Zuckerberg on Threads:

https://www.threads.net/@zuck/post/C2QBoRaRmR1

Did you know I wrote one of my Bachelor's theses at Lund University on PC energy consumption? 🙂 https://lup.lub.lu.se/student-papers/search/publication/1336368